How Meaningful is “Meaningful Human Control” in LAWS Regulation?

In recent years, the concept of “meaningful human control” (MHC) has emerged as a key consideration in regulating lethal autonomous weapon systems (LAWS). This standard seeks to ensure substantial human involvement in overseeing and directing the operational functions of LAWS while establishing a threshold for accountability. The MHC test has gained significant traction in the States’ submissions on a new regulatory framework for LAWS compiled in the 2024 UN Secretary-General’s Report.

Despite the increasing support for establishing MHC as the regulatory benchmark for LAWS, the test has not been without criticism. Notably, the United States, as the leading AI power, argues that “a focus on ‘control’ would obscure rather than clarify the genuine challenges in this area” (p. 115). This critique is rooted in both conceptual and operational concerns and has found support within international humanitarian law (IHL) scholarship (see here and here).

This post examines how the regulatory discourse on LAWS frames MHC and explores the theoretical and operational implications of that framing. It argues that MHC has yet to establish a clear and enforceable standard for governing LAWS.

Origins of the Term

Although human control as the ethical backbone of autonomous weapon systems (AWS) development appears to date back to the earliest concerns about AWS, the term MHC itself seems to have originated in a 2013 policy paper discussing the “UK approach to unmanned systems.” As employed in that paper, the term is linked to Article 36 of Additional Protocol I (AP I), which requires States to conduct legal reviews of all new weapons, means, and methods of warfare in order to determine whether international law prohibits their use.

The use of the term MHC in the paper is confusing. Early in the paper, it calls on the UK government to “commit to, and elaborate, meaningful human control over individual attacks” (p. 3). In this sense, the term seems to resonate with the requirement of Article 36. However, in the very next part, the paper asks the government to “strengthen commitment not to develop fully autonomous weapons and systems that could undertake attacks without meaningful human control” (p. 4). In the latter use, MHC suddenly becomes a prohibitive test bordering on a definitional threshold for fully autonomous weapons systems. Later, one of the contributors to this early formulation stated that MHC was meant to serve as a structural test rather than a physical actuality, pointing to “a set of processes and rules, rather than physical control over weapons systems.”

By then, however, the term had become such a mantra in the AWS literature that it had already emerged as a major term of art in the UN Convention on Certain Conventional Weapons discussions in May 2014. During the March 2023 session of the Group of Governmental Experts on lethal autonomous weapons, States advocating treaty restrictions on LAWS increasingly invoked MHC as a requirement. The UN Secretary-General’s report of 1 July 2024 then articulated some preliminary indicators for the test.

Meaningful Human Control: Conceptual Framework

MHC is not a recognized term in the military domain, which adds a further layer of complexity to its application. For instance, the U.S. Department of Defense (DoD) Dictionary defines airspace control as “capabilities and procedures used to increase operational effectiveness by promoting the safe, efficient, and flexible use of airspace” (p. 13). By some stretch of the imagination, such a technical understanding of control could be applied to MHC, were it not for the terms “meaningful” and “human,” which act as qualitative and categorical qualifiers.

Nonetheless, IHL scholars have made efforts to clarify the meaning of MHC. Different proposals define MHC differently (p. 4, 14-15). The general debate on the meaning of MHC has been shaped by the values and norms held by various actors advocating different definitions. One perspective frames MHC as a moral imperative, focused on ethical concerns and the need to preserve human dignity in warfare. Another approach views it as an operational test, ensuring the primacy of human agency in decision-making. A more legalistic interpretation sees MHC as a mechanism for compliance with international law rather than a fundamental restriction on AWS development (p. 145-46). These differing narratives shape the broader debate over how autonomy should be regulated in military contexts.

As two authors have pointed out, “[w]hat seems required is that behavior of the system (the human operators, and the complex system, including interfaces that support decision-making) covary with moral reasons of a human agent for carrying out X or omitting X” (p. 6). In other words, for human control to qualify as meaningful, the system’s behavior must respond to the moral reasons of the human agent. While this interpretation could offer a path toward accountability, it also runs into a significant limitation: human delegation. For AWS to be considered fully autonomous, functions requiring human cognition must be delegated to the machine, which weakens the very link between human reasons and system behavior that this interpretation requires.

Moreover, the emphasis on sufficient operator training does not necessarily imply a specific degree or quality of control over LAWS. Training qualifies the operator’s competence, not the system itself. Given these complexities, one commentator has suggested that the core question is whether “the human operator has sufficient degrees of situational awareness with regard to the system’s operation in its environment, as well as whether the system is functioning within appropriate parameters” (p. 2).

This argument effectively shifts the debate away from the weapon system’s autonomy and toward the human operator’s role in managing it. Yet the human operator is a latecomer to the process of interacting with LAWS, arriving only after the system has been designed and developed. The current application of MHC therefore seeks to extend beyond operator authorization, making it a fundamental requirement in the design and development of autonomous weapon systems. This objective can only be achieved if the system’s design allows the operator to meaningfully influence and control its processes.

The International Committee of the Red Cross emphasizes that MHC should be applied specifically to the “critical functions” of LAWS (p. 7). Meanwhile, some States advocate for an even broader application, arguing that MHC should be maintained at all stages of LAWS operation, including design, development, target selection, and engagement (p. 38).

Meaningful Human Control in the UN Secretary-General’s 2024 Report

Recent submissions by States to the UN Secretary-General regarding the priorities of a potential treaty governing LAWS indicate a renewed effort by proponents of the MHC test to provide greater clarity on the issue. According to the UN Secretary-General’s report (p. 6-7), States identified the necessary elements of MHC, which include ensuring that humans retain the following:

– Sufficient information, including knowledge of the weapon system’s capabilities and the operational context, to ensure compliance with international law;

– The ability to exercise their judgment to the extent required by IHL;

– The ability to limit the types of tasks and targets;

– The ability to impose restrictions on the duration, geographical scope, and scale of use;

– The ability to redefine or modify the system’s objectives or missions;

– The ability to interrupt or deactivate the system.

The report also outlines several procedures that States must follow to fulfill these requirements. However, it still leaves several critical questions unanswered.

For instance, it remains unclear whether the reference to “the ability to exercise their judgment to the extent required by international humanitarian law” leaves room for autonomous weapons to determine for themselves how far they may make decisions without human oversight. On the human side, it is also uncertain whether “the extent required by international humanitarian law” refers exclusively to pre-existing IHL rules, such as those governing targeting, proportionality, and military necessity, or whether it anticipates the development of new rules specific to AWS. The latter remains an evolving area of law, and even if a new treaty is eventually adopted, it will likely take years before it becomes enforceable.

The elements outlined in the report conflate structural issues, such as compliance with IHL, with technical standards, such as the ability to redefine and modify a system’s objectives or missions. This approach does not necessarily account for the differences between various types of autonomous systems, namely munitions, platform-based systems, and operational systems. For example, once activated, munitions can autonomously select a target and cannot be recalled. However, because their initial activation is controlled by humans, accountability is comparatively easy to establish (p. 28-29, 31).

The issue becomes more complex for operational systems, where there may be a considerable gap between human decision-making and the final execution stage. For instance, operational systems can undertake military planning in a way that entirely replaces human staff responsible for such decisions today (p. 98). Does MHC outright forbid the use of such systems, or does it impose an obligation to introduce safeguards such as “the ability to interrupt or deactivate the system?”

Some of these questions might be addressed through a two-tier approach to LAWS regulation, which “bans weapon systems that cannot comply with international law—such as systems incapable of distinguishing between combatants and civilians, or making proportionality assessments—while regulating systems that, despite featuring aspects of autonomous decision-making, can still comply with IHL.” However, MHC extends far beyond this approach. According to certain States, MHC is intended to ensure human control at all stages of LAWS operations, raising further questions about its feasibility as a regulatory standard (p. 6).

It is important to recognize that MHC may in itself create both legal challenges and operational complexities. For systems operating over long ranges, reliance on remote control can lead to failures, as in the case of Russia’s Uran-9 Combat Robot in Syria, whose remote control function frequently malfunctioned. Additionally, the risk of human misinterpretation increases in remote environments where LAWS operate independently, raising concerns about reliability and oversight.

While MHC emphasizes the human element, the integration of cognitive interfaces in planning, target selection, and engagement can obscure the independent role of each actor involved in the process. This introduces complications for explainability and traceability in decision-making, issues that several States have identified as essential to the implementation of MHC.

It is perhaps for these reasons that the United States has taken the position that “[t]here is not a fixed, one-size-fits-all level of human judgment that should be applied to every context. Some functions might be better performed by a computer than a human being, while other functions should be performed by humans” (p. 115).

Meaningful Human Control: A Realistic Vision

MHC is an important precondition in the regulation of LAWS and is undoubtedly intended to counterbalance the perceived dehumanization of armed conflict (p. 20, 39, 40). It is also rooted in broader ethical concerns surrounding autonomous technology, echoing principles such as Asimov’s laws, which emphasize that robots should not cause harm to humans (p. 137).

However, in practice, MHC functions more effectively as a structural framework than as an operational standard. Its application on the battlefield may not withstand the realities of combat situations. By analogy, this can be compared to the application of the effective control or overall control tests (standards used to attribute private conduct to a State for the purposes of determining State responsibility and armed conflict classification) to cases of individual criminal liability for aiding and abetting a crime. A test designed to define structural requirements is fundamentally different from one intended for operational decision-making.

In this context, a structural framework ensures that humans retain overarching authority over the legal and regulatory dimensions of LAWS by developing stringent and regular review procedures under Article 36 of AP I and implementing standards such as the two-tier approach at the design and development stages. This approach emphasizes proactive governance, where human oversight is embedded in the foundational principles of LAWS regulation rather than limited to real-time battlefield decisions. The structural test also implies that MHC is best suited to safeguarding human authority over the creation and enforcement of rules governing LAWS, which ensures that legal and ethical considerations shape the general parameters within which autonomous systems operate.

Rather than focusing solely on the modalities of human intervention in machine behavior, this framework prioritizes the integration of accountability measures, compliance protocols, and safeguards at a more general level, thereby reinforcing human agency in shaping the trajectory of autonomous warfare. Under this approach, Article 36 reviews and monitoring processes remain integral to MHC. Such an approach would allow for human control to be meaningfully exercised at specific stages, such as ensuring a functional understanding of the weapon system and incorporating context-specific safeguards before deployment.

Concluding Thoughts

The ongoing debate over what constitutes meaningful human control remains central to the regulation of LAWS. However, as international consensus builds, it is imperative that MHC not simply be accepted as a rhetorical ideal but be refined into a well-defined, enforceable, and operationally viable concept. Without further conceptual and legal clarification, MHC risks becoming an ambiguous regulatory standard that fails to deliver either ethical protection or practical compliance in the field of autonomous warfare.

***

Masoud Zamani is a lecturer of International Law and International Relations at the University of British Columbia.

The views expressed are those of the author, and do not necessarily reflect the official position of the United States Military Academy, Department of the Army, or Department of Defense.

Articles of War is a forum for professionals to share opinions and cultivate ideas. Articles of War does not screen articles to fit a particular editorial agenda, nor endorse or advocate material that is published. Authorship does not indicate affiliation with Articles of War, the Lieber Institute, or the United States Military Academy West Point.

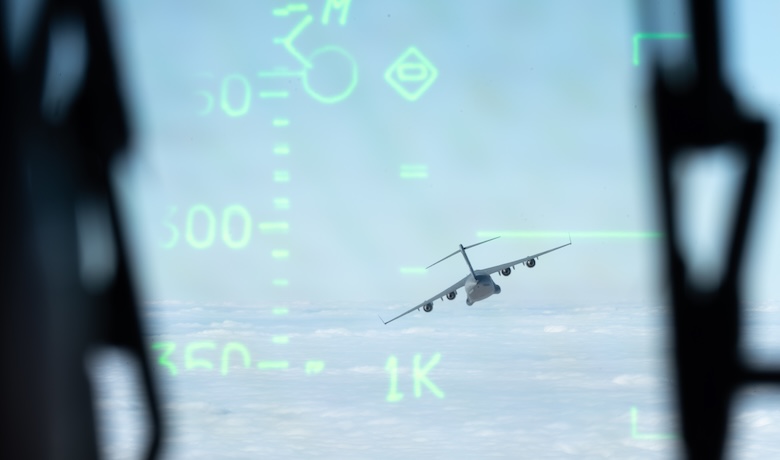

Photo credit: U.S. Air Force, Airman 1st Class Aidan Thompson